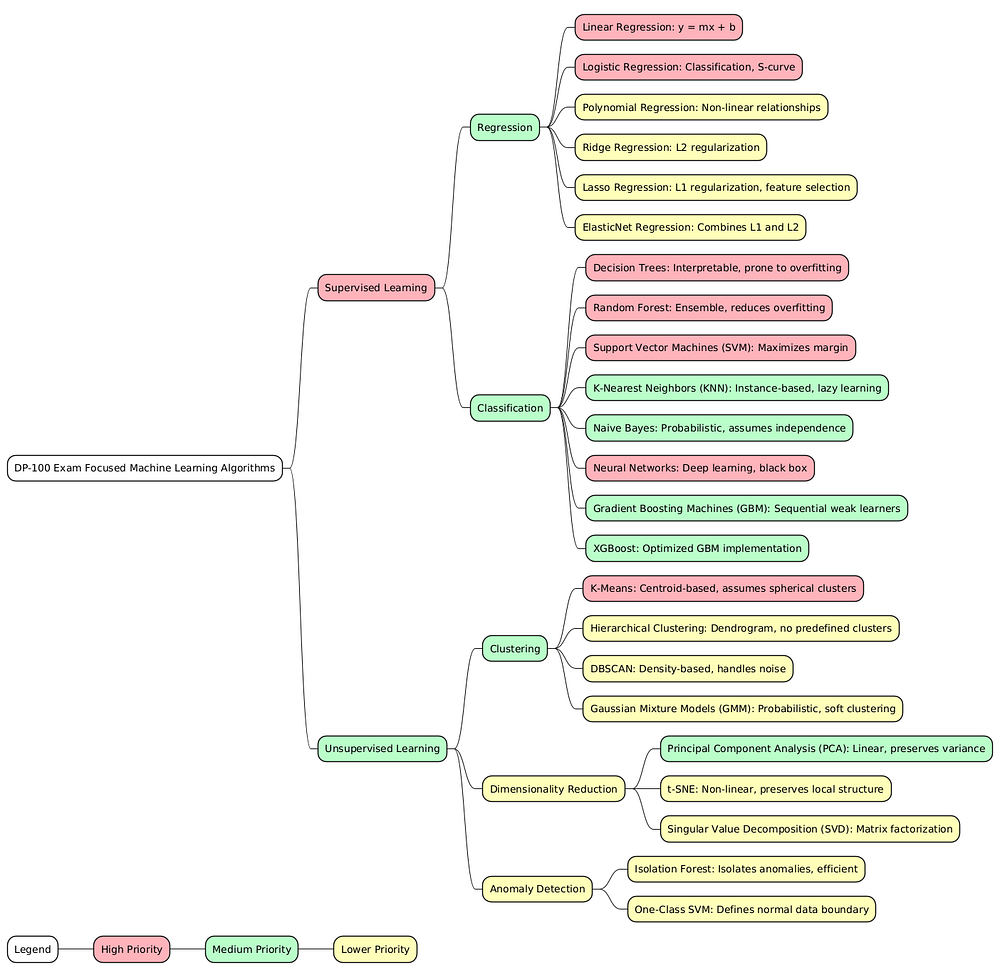

# Mindmaps: An extensive mind map focusing on Machine Learning Algorithms for the Microsoft Azure DP-100 Exam.

The following algorithms might be covered in Microsoft's Azure DP-100 exam.

Here is a summary of the mind map above.

Supervised Learning

Regression

- Linear Regression: Simple and interpretable, assumes a linear relationship between features and the target.

- Logistic Regression: Despite its name, a classification algorithm; most often used for binary classification, it outputs class probabilities.

- Polynomial Regression: Captures non-linear relationships, but risks overfitting.

- Ridge Regression: Uses L2 regularization to reduce overfitting.

- Lasso Regression: Applies L1 regularization, useful for feature selection.

- ElasticNet Regression: Combines L1 and L2 regularization for more robust models.
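
To make the difference between L2 and L1 regularization concrete, here is a minimal sketch for a single feature with no intercept, where both penalties have a closed-form solution. The function name `fit_one_feature`, the toy data, and the `alpha` values are illustrative assumptions, not part of the exam material. The ridge branch minimizes `sum((y - w*x)^2) + alpha * w^2`, and the lasso branch minimizes `0.5 * sum((y - w*x)^2) + alpha * |w|`.

```python
def fit_one_feature(xs, ys, alpha=0.0, penalty="none"):
    """Closed-form weight for y ~ w * x with one feature and no intercept."""
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    if penalty == "l2":
        # Ridge: the penalty is added to the denominator, shrinking w toward 0.
        return sxy / (sxx + alpha)
    if penalty == "l1":
        # Lasso: soft-thresholding, which can set w to exactly 0.
        shrunk = max(abs(sxy) - alpha, 0.0)
        return (shrunk if sxy >= 0 else -shrunk) / sxx
    # Ordinary least squares (no penalty).
    return sxy / sxx


# Hypothetical toy data following y = 2x exactly.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w_ols = fit_one_feature(xs, ys)                                 # 2.0
w_ridge = fit_one_feature(xs, ys, alpha=14.0, penalty="l2")     # 1.0
w_lasso = fit_one_feature(xs, ys, alpha=28.0, penalty="l1")     # 0.0
print(w_ols, w_ridge, w_lasso)
```

Ridge shrinks the weight but keeps it non-zero, while a large enough L1 penalty drives it to exactly zero, which is why Lasso is associated with feature selection.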

Classification

- Decision Trees: Easy to interpret but prone to overfitting.

- Random Forest: An ensemble of decision trees trained on bootstrap samples; combining their predictions reduces overfitting compared with a single tree.

- Support Vector Machines (SVM): Effective in high-dimensional spaces and can use different kernels.

- K-Nearest Neighbors (KNN): Simple, instance-based, but computationally expensive for large datasets.

- Naive Bayes: Based on Bayes’ theorem, assumes feature independence.

- Neural Networks: Can model complex patterns but typically require large datasets and significant computational resources.

- Gradient Boosting Machines (GBM): Builds models sequentially to reduce errors but can overfit.

- XGBoost: An efficient, scalable implementation of gradient boosting that adds regularization to help control overfitting.
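
As an illustration of why KNN is called instance-based, the sketch below classifies a query point by majority vote among its `k` nearest training points. The helper `knn_predict` and the toy data are assumptions made for this example. Note that every prediction measures the distance to every training point, which is where the cost on large datasets comes from.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Return the majority label among the k training points nearest to query.

    train is a list of (features, label) pairs; features are numeric tuples.
    """
    # No model is fitted: each prediction scans the full training set.
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical toy data: two well-separated groups.
train = [
    ((1.0, 1.0), "a"), ((1.2, 0.8), "a"), ((0.9, 1.1), "a"),
    ((5.0, 5.0), "b"), ((5.2, 4.9), "b"), ((4.8, 5.1), "b"),
]

print(knn_predict(train, (1.1, 1.0)))  # a
print(knn_predict(train, (4.9, 5.0)))  # b
```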

Unsupervised Learning

Clustering

- K-Means: Partitions data into k clusters; requires k to be chosen in advance and is sensitive to the initial centroid positions.

- Hierarchical Clustering: Builds a hierarchy of clusters and does not require the number of clusters to be specified in advance.

- DBSCAN: Density-based; finds arbitrarily shaped clusters and is robust to noise, labeling points in low-density regions as outliers.
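
The sensitivity of K-Means to its starting centroids can be seen in a minimal one-dimensional sketch of the standard assign-then-update loop (Lloyd's algorithm). The function names `kmeans_1d` and `inertia`, the data, and both sets of starting centroids are assumptions chosen for illustration.

```python
def kmeans_1d(points, centroids, max_iter=100):
    """Run K-Means on 1-D points, starting from the given centroids."""
    centroids = list(centroids)
    for _ in range(max_iter):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        updated = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    return centroids


def inertia(points, centroids):
    """Sum of squared distances from each point to its nearest centroid."""
    return sum(min((p - c) ** 2 for c in centroids) for p in points)


points = [0.0, 1.0, 10.0, 11.0, 20.0, 21.0]

good = kmeans_1d(points, [0.0, 10.0, 20.0])
poor = kmeans_1d(points, [0.0, 1.0, 2.0])

print(good, inertia(points, good))  # [0.5, 10.5, 20.5] 1.5
print(poor, inertia(points, poor))  # [0.0, 1.0, 15.5] 101.0
```

Both runs converge, but the second gets stuck in a much worse solution. This is why practical implementations usually run several initializations and keep the best result.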

Techniques

Dimensionality Reduction

- Principal Component Analysis (PCA): Projects data onto principal components to reduce dimensionality.

- t-Distributed Stochastic Neighbor Embedding (t-SNE): Non-linear technique that preserves local structure, ideal for visualization.
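
To show what "projecting onto principal components" means, here is a minimal two-dimensional PCA sketch. It relies on the fact that, for a 2x2 covariance matrix, the direction of greatest variance has a closed-form angle. The function name `first_principal_component` and the toy data are assumptions for illustration; real datasets with more than two features need a general eigen-decomposition or SVD.

```python
import math


def first_principal_component(points):
    """Return the first principal direction and the 1-D projected scores."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # PCA operates on mean-centered data.
    centered = [(x - mean_x, y - mean_y) for x, y in points]
    var_x = sum(x * x for x, _ in centered) / (n - 1)
    var_y = sum(y * y for _, y in centered) / (n - 1)
    cov_xy = sum(x * y for x, y in centered) / (n - 1)
    # Angle of the major axis of a 2x2 symmetric covariance matrix.
    angle = 0.5 * math.atan2(2 * cov_xy, var_x - var_y)
    direction = (math.cos(angle), math.sin(angle))
    # Project each centered point onto that direction: 2-D becomes 1-D.
    scores = [x * direction[0] + y * direction[1] for x, y in centered]
    return direction, scores


# Hypothetical toy data lying on the line y = x.
points = [(1.0, 1.0), (2.0, 2.0), (3.0, 3.0), (4.0, 4.0)]
direction, scores = first_principal_component(points)
print(direction)  # approximately (0.7071, 0.7071)
print(scores)
```

Because all the variance in this toy data lies along the diagonal, a single component captures it completely and the second dimension can be dropped without loss.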

Anomaly Detection

- Isolation Forest: Efficiently isolates anomalies by random partitioning.

- One-Class SVM: Learns a boundary around normal data but is sensitive to outliers in the training set.
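
The idea behind isolating anomalies by random partitioning can be sketched in one dimension: pick random split points and count how many splits it takes until a value is alone. This is a simplified illustration, not the full Isolation Forest algorithm, which also subsamples the data, picks random features, and normalizes path lengths. The function names, the data, and the parameters `n_trees` and `seed` are assumptions for this example.

```python
import random


def isolation_depth(values, target, rng):
    """Count the random splits needed until target is alone in its partition."""
    subset = list(values)
    depth = 0
    while len(subset) > 1:
        lo, hi = min(subset), max(subset)
        if lo == hi:
            # Only duplicates remain, so no further split is possible.
            break
        split = rng.uniform(lo, hi)
        # Keep only the side of the split that contains the target.
        if target < split:
            subset = [v for v in subset if v < split]
        else:
            subset = [v for v in subset if v >= split]
        depth += 1
    return depth


def average_depth(values, target, n_trees=200, seed=0):
    """Average isolation depth over many independent random partitionings."""
    rng = random.Random(seed)
    total = sum(isolation_depth(values, target, rng) for _ in range(n_trees))
    return total / n_trees


# Hypothetical data: values clustered near 1.0 plus one obvious outlier.
values = [1.0, 1.1, 0.9, 1.05, 0.95, 1.2, 0.8, 10.0]

outlier_depth = average_depth(values, 10.0)
inlier_depth = average_depth(values, 1.0)
print(outlier_depth, inlier_depth)
```

The outlier is typically separated after about one split, while a value in the dense region needs several. A shorter average path therefore indicates a more anomalous point.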